ChatGPT really does offer mundane utility

It’s practically useful, and not currently on track to kill us all

Hi everyone! Welcome to part two of the discussion of my new paper “How People Use ChatGPT”, written with an all-star team of OpenAI researchers. Here is part one, where I talk about the growth of ChatGPT and the privacy implications of our research.

Tonight at 8pm EST I will speak at the OpenAI Forum’s Future of Work series along with my coauthor and OpenAI chief economist Ronnie Chatterji. If you’re interested, you can sign up at the link!

Today I want to explore how ChatGPT is used. In the paper we used gpt-5 to classify messages along several dimensions. These include whether the message was work-related, the broad topic of the conversation, the user’s intent, the user’s level of satisfaction, and whether it related to common definitions of various job tasks. Classifying messages is an art rather than a science because no two messages are alike and because you rarely see the full context behind a user query.

In addition, we faced the tricky issue of whether to classify single messages or entire conversations. Full conversations provide the most context, but many people use ChatGPT only within one never-ending conversation, which jumps back and forth between topics. On the other hand, single messages often don’t provide enough context. So we pursued a middle ground by classifying single messages but allowing the model to use the prior 10 messages in the conversation as context. We also validated each of our classification prompts against human judgment of a set of messages from WildChat, as I discussed in the previous post. See Appendix B of the paper for more detail on how well we did.
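The middle ground described above (classify one message, but let the model see up to 10 earlier messages) can be sketched as a small input-building step. This is a minimal illustration only: the `Message` class, its field names, and the prompt wording are hypothetical and are not the paper’s actual prompt format.

```python
from dataclasses import dataclass

CONTEXT_WINDOW = 10  # number of prior messages shown as context, per the post


@dataclass
class Message:
    role: str  # hypothetical field, e.g. "user" or "assistant"
    text: str


def build_classifier_input(conversation: list[Message], index: int,
                           window: int = CONTEXT_WINDOW) -> str:
    """Format the message at `index` for classification, preceded by up to
    `window` earlier messages from the same conversation as context."""
    if not 0 <= index < len(conversation):
        raise IndexError("message index out of range")
    start = max(0, index - window)  # never look back past the first message
    context_lines = [f"[{m.role}] {m.text}" for m in conversation[start:index]]
    target = conversation[index]
    return (
        "Context (earlier messages):\n"
        + ("\n".join(context_lines) if context_lines else "(none)")
        + f"\n\nMessage to classify:\n[{target.role}] {target.text}"
    )
```

For the 15th message of a long conversation, only messages 5 through 14 are passed along as context, so a never-ending conversation does not flood the classifier with unrelated earlier topics.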

Work usage is growing, but personal usage is growing faster

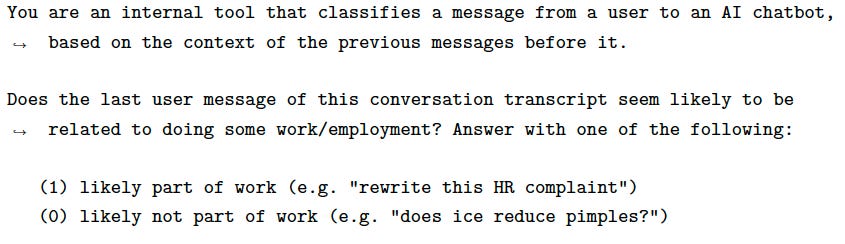

Here is the exact text of the prompt we used to classify messages that were work-related:

To be clear, we did not give the model any information about the user such as their education or occupation, even if that would probably improve its guess.

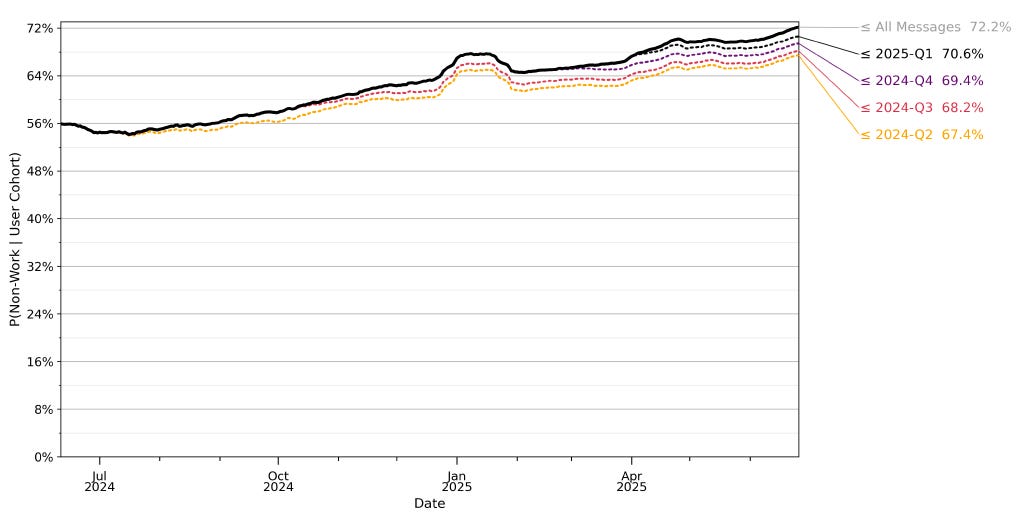

In June 2024, messages were split roughly evenly between non-work and work. Over the next year, work messages grew by 3.4x, but non-work messages grew by 8x and now make up 73% of all messages sent by ChatGPT users. The figure below shows the share of messages that are unrelated to work by user signup cohort. More than two-thirds of the growth in non-work usage occurred within cohorts rather than across cohorts. In other words, the growth in non-work messages does not mainly reflect new signups being more interested in personal use. Rather, as users stay on the platform longer, non-work messages become relatively more frequent.
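Splitting a change into “within cohort” and “across cohort” parts is a shift-share decomposition. The post doesn’t spell out the exact procedure, so here is a minimal sketch of one common version, assuming the same cohorts are observed in both periods (cohorts that sign up in between would need their own convention). The aggregate non-work share is a message-weighted average of each cohort’s non-work share. The change then splits into a within term (cohorts shifting their own mix) and a between term (message volume shifting toward cohorts with a different mix). The numbers in the example are made up.

```python
def decompose_share_change(w0, s0, w1, s1):
    """Split the change in an aggregate share into within- and between-cohort terms.

    w0, w1: each cohort's fraction of all messages in the start and end periods
    s0, s1: each cohort's non-work share of its own messages in each period
    Using average weights and average shares makes the two terms sum exactly
    to the total change, with no leftover interaction term.
    """
    rows = list(zip(w0, s0, w1, s1))
    within = sum((a0 + a1) / 2 * (b1 - b0) for a0, b0, a1, b1 in rows)
    between = sum((a1 - a0) * (b0 + b1) / 2 for a0, b0, a1, b1 in rows)
    return within, between


# Hypothetical two-cohort example (not data from the paper).
within, between = decompose_share_change(
    w0=[0.6, 0.4], s0=[0.50, 0.45],
    w1=[0.4, 0.6], s1=[0.70, 0.72],
)
# within is about 0.235 and between about -0.003; together they equal the
# 0.232 rise in the aggregate share (0.48 -> 0.712).
```

In this toy case almost all of the rise comes from the within term, which is the pattern the post describes for the real data.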

What are the most common ChatGPT conversation topics?

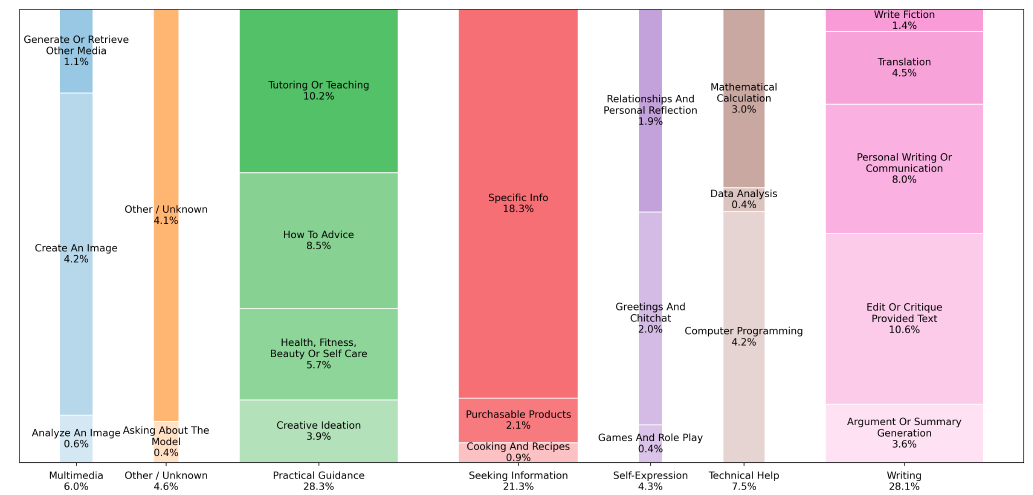

We modified a classifier used by internal research teams at OpenAI to categorize messages into one of 24 mutually exclusive and collectively exhaustive topics. We then aggregated those 24 topics up to 6 larger categories, which are color-coded along with the full list in the figure below.

The most common of the 24 topics is Specific Information, which is just searching for facts about people, places, and things. We add Purchasable Products and Cooking and Recipes to it to get the larger category of Seeking Information, which is 21.3% of all messages. Seeking Information is the closest substitute for traditional web search.
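The roll-up from 24 topics to 6 categories amounts to a fixed lookup followed by a count. A minimal sketch, assuming per-message topic labels are already available. The mapping includes only the topics named in this post (the full 24-topic list is in the paper’s figure), so it is deliberately incomplete.

```python
from collections import Counter

# Partial topic -> category map, limited to topic names mentioned in this post.
TOPIC_TO_CATEGORY = {
    "Specific Information": "Seeking Information",
    "Purchasable Products": "Seeking Information",
    "Cooking and Recipes": "Seeking Information",
    "Tutoring and Teaching": "Practical Guidance",
    "How-To Advice": "Practical Guidance",
    "Health/Fitness/Beauty/Self-Care": "Practical Guidance",
    "Creative Ideation": "Practical Guidance",
    "Edit or Critique Provided Text": "Writing",
    "Translation": "Writing",
    "Computer Programming": "Technical Help",
}


def category_shares(topic_labels):
    """Map per-message topic labels to broad categories and return each
    category's share of all labeled messages. An unmapped topic raises
    KeyError, since every topic should belong to exactly one category."""
    counts = Counter(TOPIC_TO_CATEGORY[t] for t in topic_labels)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}
```

For example, four toy messages labeled Specific Information, Cooking and Recipes, Translation, and Tutoring and Teaching give Seeking Information a 50% share and Writing and Practical Guidance 25% each.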

The largest of the 6 broad categories is Practical Guidance at 28.3%. The largest sub-topic is Tutoring and Teaching at 10.2%, making education one of the largest use cases of ChatGPT. People are sending more than 260 million learning-related messages per day. As you might expect, this category fluctuates with the timing of the school year. The other sub-topics are How-To Advice, Health/Fitness/Beauty/Self-Care, and Creative Ideation. Appendix A.3 gives specific examples of some messages that count in each category.

Practical Guidance differs from Seeking Information because it is interactive and “hands-on”. ChatGPT can customize a study plan or a workout routine for your specific needs and modify it flexibly in response to follow-up questions. Web search is static.

Writing is one of the most common use cases of ChatGPT, comprising 28.1% of all messages. The most frequent sub-topic within Writing is Edit or Critique Provided Text at 10.6%. Another common sub-topic is Translation, at 4.5%. Together these two sub-topics make up more than half of all Writing messages, which is interesting because in both cases ChatGPT is modifying user text rather than generating text from scratch.

Practical Guidance, Writing, and Seeking Information collectively account for nearly 80% of all ChatGPT messages. Much lower down the list are two topics that have been the focus of a lot of public conversation about ChatGPT – Technical Help and Self-Expression. Computer Programming is the largest sub-topic within Technical Help at 4.2% of all messages. That’s a much smaller share than I expected to see, and it reflects the broadening of ChatGPT usage far beyond the early power-users who were much more focused on writing code.
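As a quick check on the shares quoted above, here is the arithmetic. All inputs are percentages given in this post.

```python
# Shares of all messages, in percent, as quoted in this post.
practical_guidance = 28.3
writing = 28.1
seeking_information = 21.3

# The three largest categories together: about 77.7%, i.e. "nearly 80%".
top_three = practical_guidance + writing + seeking_information

# Writing sub-topics in which ChatGPT modifies text the user supplied.
edit_or_critique = 10.6
translation = 4.5

# Fraction of Writing messages in those two sub-topics: about 0.54, i.e. "more than half".
modify_share_of_writing = (edit_or_critique + translation) / writing
```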

A recent Harvard Business Review article argued that the top use case for generative AI was “Therapy and Companionship”. That’s not true at all in our data. The corresponding category, Relationships and Personal Reflection, accounts for only 1.9% of all messages. The HBR analysis was based on conversations from online forums like Reddit and Quora rather than on internal message data from ChatGPT or other chatbots.

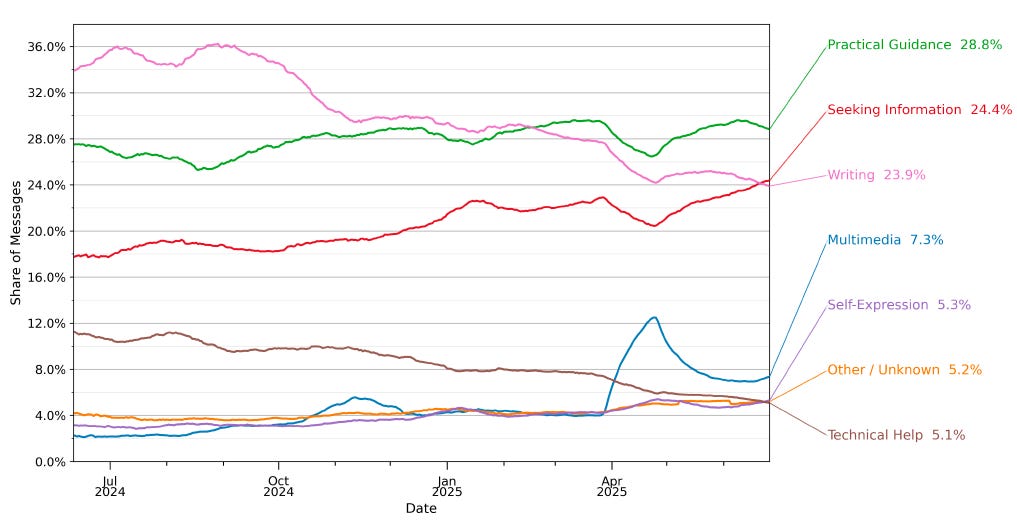

The figure below shows the trend over the last year in message shares for the 6 broad conversation topics.

In the last year, Writing has declined from 34% to 24% and Technical Help has declined from nearly 12% to 5% of all messages. Seeking Information and Multimedia are the big risers, with the latter reflecting the addition of image generation capabilities in GPT-4o.

Which conversation topics are more likely at work? Writing dominates at about 40% of all work messages. Technical Help jumps from 5.1% of all messages to 10% of all work messages. Seeking Information messages, by contrast, are much more likely to be personal than work-related.
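The jump from 5.1% to 10% can also be read the other way around, as the fraction of Technical Help messages that are work-related, using Bayes’ rule. This is a rough back-of-envelope sketch. It assumes that the 73% non-work share and the two Technical Help shares refer to the same period, which the post does not guarantee.

```python
def share_of_topic_that_is_work(topic_share_of_work, work_share, topic_share_of_all):
    """Bayes' rule: P(work | topic) = P(topic | work) * P(work) / P(topic)."""
    return topic_share_of_work * work_share / topic_share_of_all


# Inputs quoted in the post; treating them as the same period is an assumption.
technical_help = share_of_topic_that_is_work(
    topic_share_of_work=0.10,   # Technical Help share of work messages
    work_share=1 - 0.73,        # work share of all messages (non-work is 73%)
    topic_share_of_all=0.051,   # Technical Help share of all messages
)
# technical_help is roughly 0.53
```

Under that assumption, roughly half of Technical Help messages would be work-related, compared with about 27% of messages overall.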

Asking, Doing and Expressing

Existing research on the possible labor market impacts of generative AI focuses on what the technology can do better (or more cheaply) than humans. For example, several studies have mapped LLM capabilities to job tasks and then asked which occupations are most “exposed” to generative AI.

Yet when you look at what people actually use ChatGPT to do, rather than what it is capable of doing, you see a much broader picture. People do use ChatGPT to get things done faster, but they also use it as an advisor, a guide, an oracle, and a mentor. That doesn’t map very well onto job tasks. A very nice paper by Ide and Talamas called “Artificial Intelligence in the Knowledge Economy” distinguishes between 1) AI agents that act as co-workers performing tasks; and 2) AI as a co-pilot that helps you solve problems you couldn’t solve on your own.

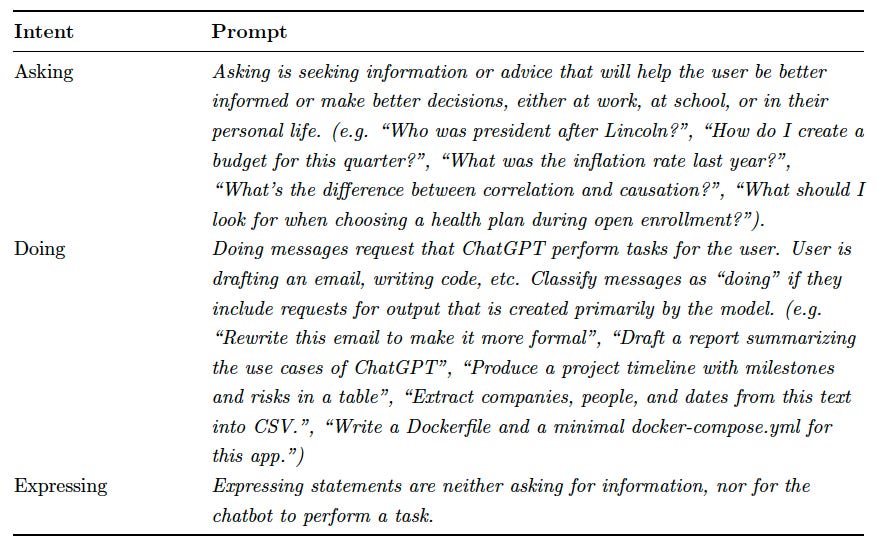

Our attempt to distinguish between these use cases is a classifier of user intent that we call Asking, Doing, or Expressing. Below is the relevant prompt snippet:

Think about Doing messages as delivering output (e.g. a written passage, some lines of code) that can be plugged into a production process and Asking messages as providing information or supporting decision-making. Expressing messages have little or no economic content.

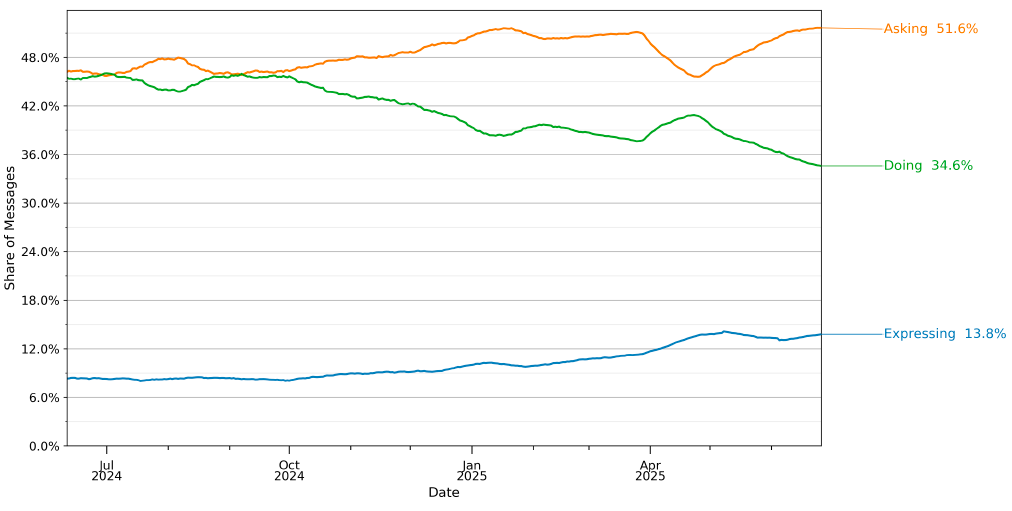

The figure below shows that Asking is the most common use case, comprising 52% of all messages as of July 2025. A year ago, messages were split evenly between Asking and Doing, but Doing has since declined.[1]

We find decent but imperfect overlap between conversation topics and Asking/Doing/Expressing. Seeking Information is about 90% Asking, compared to about 70% for Practical Guidance. Writing is by far the most common topic of Doing messages – in fact, Writing/Doing comprises about 35% of all work-related messages. Multimedia is about 80% Doing. Technical Help is evenly split between Asking and Doing. This suggests that computer programmers may be splitting their queries between asking ChatGPT to write some code for them and figuring out how to debug existing code, for example.
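Overlap figures like “Seeking Information is about 90% Asking” come from cross-tabulating two labels per message and normalizing within each topic. A minimal sketch, assuming each message already carries a topic label and an intent label. The toy data in the example is invented to mirror the rough proportions quoted above and is not drawn from the paper.

```python
from collections import Counter, defaultdict


def intent_mix_by_topic(labels):
    """Given (topic, intent) pairs, one per message, return for each topic the
    share of its messages labeled with each intent (Asking, Doing, Expressing)."""
    counts = defaultdict(Counter)
    for topic, intent in labels:
        counts[topic][intent] += 1
    return {
        topic: {intent: n / sum(c.values()) for intent, n in c.items()}
        for topic, c in counts.items()
    }


# Toy data: 9 of 10 Seeking Information messages are Asking; Technical Help is split evenly.
toy = [("Seeking Information", "Asking")] * 9 + [
    ("Seeking Information", "Doing"),
    ("Technical Help", "Asking"),
    ("Technical Help", "Doing"),
]
mix = intent_mix_by_topic(toy)
```

Normalizing within each topic (rows) answers “what is this topic mostly used for?”. Normalizing within each intent (columns) would instead answer questions like “what share of Doing messages are Writing?”.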

How do ChatGPT messages map to work activities?

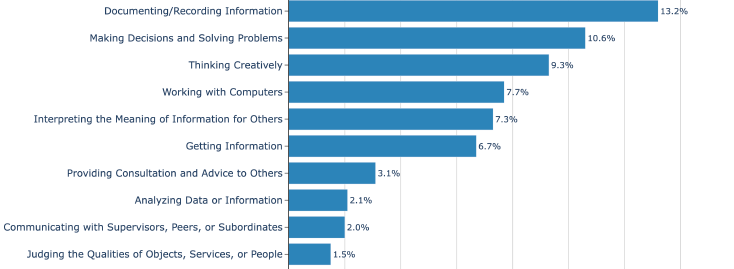

We map message content to work activities using the Occupational Information Network (O*NET) database, following the approach used in a recent study of Microsoft Copilot users. O*NET – which is supported by the U.S. Department of Labor – systematically categorizes jobs according to the skills, tasks, and work activities required to perform them. It asks workers, employers, and trained observers what people do all day at work and then maps their responses to thousands of different job tasks. O*NET then aggregates these tasks up to three levels of detail. We classify messages into one of 332 intermediate work activities (IWAs) and then use the official O*NET taxonomy to aggregate them up to one of 41 Generalized Work Activities (GWAs). Appendix A.4 gives an example of one of our O*NET classification prompts.
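The IWA-to-GWA step is a many-to-one roll-up followed by a ranking. A minimal sketch, assuming each message already has an IWA label. The IWA keys are hypothetical placeholders (the post uses the official O*NET taxonomy for the real mapping), and only a few GWA names from this post appear.

```python
from collections import Counter

# Hypothetical IWA keys standing in for the 332 real IWAs; each maps to one of the 41 GWAs.
IWA_TO_GWA = {
    "iwa_a": "Documenting/Recording Information",
    "iwa_b": "Documenting/Recording Information",
    "iwa_c": "Making Decisions and Solving Problems",
    "iwa_d": "Thinking Creatively",
}


def rank_gwas(iwa_labels):
    """Roll message-level IWA labels up to GWAs and rank GWAs by message count,
    most common first."""
    gwa_counts = Counter(IWA_TO_GWA[iwa] for iwa in iwa_labels)
    return gwa_counts.most_common()
```

Because several IWAs can share a GWA, a GWA can rank first even if none of its individual IWAs is the single most common one.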

The figure below lists the top 10 GWAs associated with work-related messages. The top five categories – Documenting/Recording Information, Making Decisions and Solving Problems, Thinking Creatively, Working with Computers, and Interpreting the Meaning of Information for Others – account for nearly half of all messages. No category outside of the top 10 accounts for more than 1% of total message volume.
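Claims like “the top five account for nearly half” are top-k shares. The post reports only those. A Herfindahl-Hirschman index (HHI, the sum of squared shares) is one standard way to summarize concentration in a single number, but it is not a measure used in the paper. A minimal sketch of both follows; any counts you pass in for illustration would be made up.

```python
def top_k_share(counts, k):
    """Fraction of all messages falling in the k most common categories."""
    ordered = sorted(counts, reverse=True)
    return sum(ordered[:k]) / sum(ordered)


def hhi(counts):
    """Herfindahl-Hirschman index: the sum of squared shares. It equals 1/N when
    messages are spread evenly over N categories and 1 when all fall in one."""
    total = sum(counts)
    return sum((c / total) ** 2 for c in counts)


# Hypothetical message counts for ten GWAs (not data from the paper).
example_counts = [30, 20, 10, 10, 10, 5, 5, 5, 3, 2]
```

With 41 GWAs, perfectly even usage would give an HHI of about 0.024, so values far above that indicate the kind of concentration described here.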

These results suggest that ChatGPT use is concentrated heavily in only a small set of work tasks related to 1) seeking and analyzing information, and 2) problem-solving and decision support. Is that because the heavy users are all in information-intensive occupations?

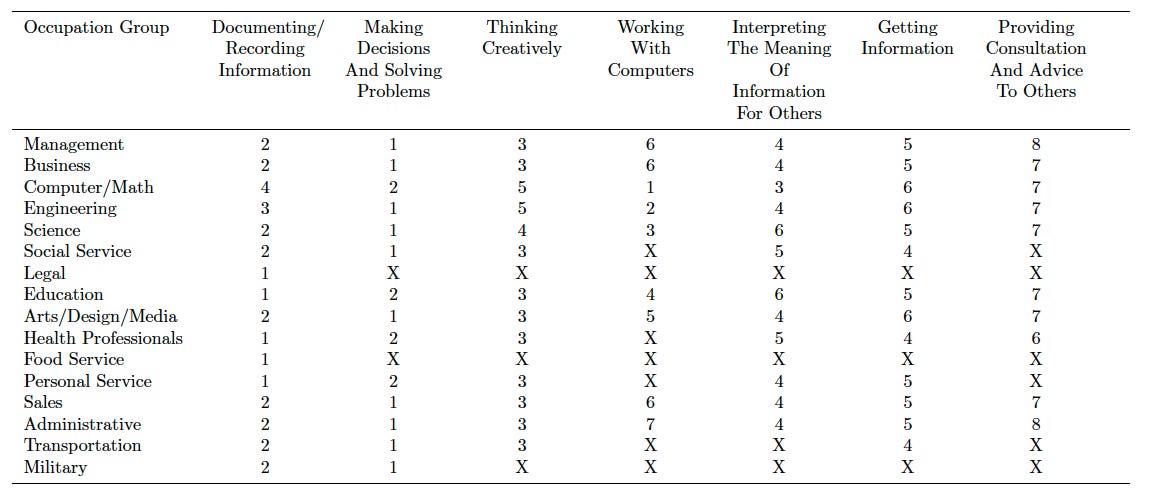

Not really. We linked the classification of work activities to users’ actual occupations, using the Data Clean Room (DCR) methodology discussed in part one. The table below presents the ranking of each GWA within each occupation category.[2]

Making Decisions and Solving Problems ranks in the top two of every single occupation category where we had enough data to report on at least two GWAs. Documenting/Recording Information also ranks highly in all the occupations. People in very different occupations seem to be using ChatGPT in similar ways. I find it extremely surprising that Making Decisions and Solving Problems is the most frequent GWA in Sales, rather than something like Selling or Influencing Others, or that it is more common in Education than Training and Teaching Others.
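The occupation table ranks GWAs within each occupation and shows an “X” for any cell with too few observations to meet the privacy standard. A minimal sketch of that presentation step, assuming a simple minimum cell count. The threshold of 100 is hypothetical, since the post does not state the actual standard. Because ranks follow counts and only small cells are suppressed, the Xs in this simplified version always come after the reported ranks.

```python
MIN_CELL = 100  # hypothetical privacy threshold; the real standard is not stated in the post


def ranked_with_suppression(gwa_counts, min_cell=MIN_CELL):
    """Rank GWAs by message count within one occupation (1 = most common),
    replacing the rank of any cell below `min_cell` with "X"."""
    ordered = sorted(gwa_counts.items(), key=lambda kv: kv[1], reverse=True)
    return {
        gwa: (rank if count >= min_cell else "X")
        for rank, (gwa, count) in enumerate(ordered, start=1)
    }
```

For a hypothetical occupation with 500, 300, 40, and 20 messages across four GWAs, this yields ranks 1, 2, X, X.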

Overall, ChatGPT provides mundane practical value both at work and in people’s personal lives. At the same time, the mapping of messages to work activities seems highly concentrated in activities related to information analysis and decision support that do not map very well onto practical tasks.

[1] Note that this differs from the recent Anthropic Economic Index report, which found an increase in “Directive” conversations where users delegate tasks completely to Claude. Their prompt differs from ours. More importantly, they only classify messages that can be mapped to job tasks, whereas we study all user messages. Not surprisingly, Doing messages are more likely to be work-related.

[2] An “X” in the table means that there were not enough observations in the cell to meet our user privacy protection standards. You’ll note that the Xs are never out of order, meaning the rankings go 1, 2, X, X… This indicates that the GWAs not listed in the table were also too rare to report. The table notes discuss the small number of examples where that was not the case.
